Kickstart Data Quality by Design with Great Expectations

21 December 2022

The importance of data quality is a no-brainer. As data landscapes grow more complex by the day, you need to ensure that your data consumers build their BI dashboards, Machine Learning models, and other data products on good-quality data.

A need for Data Quality by Design

Data quality, however, should not be confined to your Data and IT teams. As those teams deploy their own set of tools to validate your data, information on data quality tends to be available only to the people on those teams.

Data stewards and quality analysts can and should play a vital role in mitigating and remediating data quality issues. Data consumers should also be aware of such issues and be informed automatically. If not, they may start to mistrust your data, a perception that is very hard to overcome even after data quality has improved. Worse, they may place false trust in your data, which can lead to wrong business decisions or disrupt critical processes such as customer communications.

In short, there is a need for Data Quality by Design: going beyond data validation, creating visibility for data quality issues and triggering calls to action for everyone involved, while taking into account how your data flows within your organization. Running a line of code in your data pipeline to validate some business logic is simply not enough. Instead, you need a scalable approach that provides a common way to connect to data sources and inform, centralize, and monitor data quality results.

Overcoming data quality challenges with Great Expectations

Eager to start improving your data quality? Enter Great Expectations. Great Expectations is an open-source Python library that provides a framework for connecting with data sources, profiling data, identifying data quality rules, and triggering actions such as notifications or integration with your data catalog. Because it is flexible and extensible, Great Expectations is a great candidate to kickstart your Data Quality by Design projects, provided you have the right technical skill set on board.

Let’s take a look at how Great Expectations can help you:

- discover & validate data quality rules,

- trigger actions to share and monitor data quality results,

- involve the right people.

1. Discovering & validating data quality rules

Great Expectations allows you to connect to many different data sources and empowers a bottom-up approach to Data Quality through interaction with your data.

It uses a SQLAlchemy, pandas, or Spark engine to execute and validate data quality rules against your data. With Great Expectations, you can connect to various sources, including databases (MySQL, MSSQL, Snowflake, ...), cloud storage (Amazon S3, Azure Blob, ...), and in-memory data. That enables you to interact with your data by profiling, testing assumptions, and visualizing data quality metrics. Then, based on the results, you can add data quality rules (so-called Expectations) to a collection that you can use to validate your data periodically.
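To make the idea of a collection of Expectations more concrete, here is a minimal sketch in plain Python. It does not use the Great Expectations API, which differs between library versions. The two function names mirror the naming style of built-in Expectations, while the `ExpectationResult` class, the `validate` helper, and the `customers` sample data are assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


@dataclass
class ExpectationResult:
    """Outcome of one data quality rule (hypothetical structure)."""
    expectation: str
    column: str
    success: bool
    unexpected_count: int


def expect_column_values_to_not_be_null(rows: List[Row], column: str) -> ExpectationResult:
    # A row is unexpected when the column is missing or None.
    unexpected = [r for r in rows if r.get(column) is None]
    return ExpectationResult(
        "expect_column_values_to_not_be_null", column, not unexpected, len(unexpected)
    )


def expect_column_values_to_be_between(
    rows: List[Row], column: str, min_value: float, max_value: float
) -> ExpectationResult:
    # Nulls are skipped here; the not-null rule covers them separately.
    unexpected = [
        r for r in rows
        if r.get(column) is not None and not (min_value <= r[column] <= max_value)
    ]
    return ExpectationResult(
        "expect_column_values_to_be_between", column, not unexpected, len(unexpected)
    )


def validate(rows: List[Row], suite: List[Callable[[List[Row]], ExpectationResult]]) -> List[ExpectationResult]:
    """Run every rule in the collection against the same data."""
    return [rule(rows) for rule in suite]


# Hypothetical sample data with one missing ID and one implausible age.
customers = [
    {"customer_id": 1, "age": 34},
    {"customer_id": 2, "age": 151},
    {"customer_id": None, "age": 28},
]

# The collection of rules that would be re-run periodically.
suite = [
    lambda rows: expect_column_values_to_not_be_null(rows, "customer_id"),
    lambda rows: expect_column_values_to_be_between(rows, "age", 0, 120),
]

results = validate(customers, suite)
for result in results:
    print(result.expectation, result.column, result.success, result.unexpected_count)
```

Keeping the rules in a collection, separate from the data they run against, is what allows the same checks to be repeated on every new batch.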

Great Expectations allows you to deploy data validations by running a command ad hoc or programmatically as part of your data pipeline.

2. Triggering actions to share and monitor data quality results

Validating your data against data quality rules is not enough to enable Data Quality by Design. You want to ensure that data quality results are shared with the right people in your organization so that they can monitor data quality over time, take action to solve issues, and assess the impact of structural solutions.

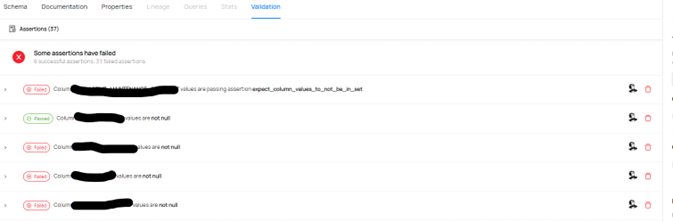

That’s where Great Expectations checkpoints come into play. With a checkpoint, you can configure what validations you will run and which actions you want to trigger based on their outcome.
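The checkpoint concept can be sketched in a few lines of plain Python: a named bundle of validations plus a list of actions that receive the outcome. This is an illustration of the pattern only, not the library's checkpoint API. The `Checkpoint` class, the `notify_on_failure` action, and the `orders` data are hypothetical, and the notification is appended to a list where a real setup would send an email, Teams, or Slack message.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Outcome = Dict[str, bool]


@dataclass
class Checkpoint:
    """Hypothetical checkpoint: which validations to run, which actions to trigger."""
    name: str
    validations: Dict[str, Callable[[], bool]]
    actions: List[Callable[[str, Outcome], None]] = field(default_factory=list)

    def run(self) -> Outcome:
        # Run every validation first, then hand the full outcome to each action.
        outcome = {label: check() for label, check in self.validations.items()}
        for action in self.actions:
            action(self.name, outcome)
        return outcome


# Stand-in for a messaging channel.
sent_messages: List[str] = []


def notify_on_failure(checkpoint_name: str, outcome: Outcome) -> None:
    # Only notify when at least one validation failed.
    failed = [label for label, ok in outcome.items() if not ok]
    if failed:
        sent_messages.append(f"{checkpoint_name}: failed {', '.join(failed)}")


# Hypothetical batch of data containing one negative amount.
orders = [
    {"order_id": 1, "amount": 20.0},
    {"order_id": 2, "amount": -5.0},
]

checkpoint = Checkpoint(
    name="daily_orders",
    validations={
        "order_id is not null": lambda: all(o["order_id"] is not None for o in orders),
        "amount is non-negative": lambda: all(o["amount"] >= 0 for o in orders),
    },
    actions=[notify_on_failure],
)

outcome = checkpoint.run()
print(outcome)
print(sent_messages)
```

Separating validations from actions means you can add a new notification channel or catalog integration without touching the rules themselves.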

In addition to triggering standard actions such as informing your data stewards about the latest data quality run (via email, Teams, or Slack), you can also share your data quality results by refreshing your data docs or integrating with your data governance tool of choice. Great Expectations has a connector for sharing data quality results with DataHub, an open-source data catalog. Integrating your data quality results with other aspects of data governance (data catalog, data lineage, ownership, ...) will substantially improve your level of maturity when it comes to data quality.

What Great Expectations is still lacking, though, is the ability to monitor data quality over time and review the impact of structural solutions. But it does provide you with the right building blocks to enable that. For example, you can use the validation results store to choose where to store results after running a data quality validation. By default, results are stored inside your Great Expectations project. You can easily change that to also store them in an Amazon S3 location, an Azure Blob location, or a PostgreSQL database. From there, you can model the results and easily visualize them in your preferred BI solution.
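As a rough sketch of what modeling the stored results could look like, the example below writes validation outcomes to a database table and computes a pass rate per run. It uses an in-memory SQLite database from the Python standard library as a stand-in for a PostgreSQL store. The `validation_results` table layout and the sample rows are assumptions for illustration and do not reflect the schema Great Expectations itself writes.

```python
import sqlite3

# In-memory SQLite stands in for an external results database.
conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE validation_results (
        run_date    TEXT,
        expectation TEXT,
        success     INTEGER  -- 1 = passed, 0 = failed
    )
    """
)

# Hypothetical outcomes of two daily validation runs.
stored_results = [
    ("2022-12-19", "customer_id not null", 1),
    ("2022-12-19", "age between 0 and 120", 0),
    ("2022-12-20", "customer_id not null", 1),
    ("2022-12-20", "age between 0 and 120", 1),
]
conn.executemany("INSERT INTO validation_results VALUES (?, ?, ?)", stored_results)

# Share of passed rules per run: the kind of series a BI tool would chart.
trend = conn.execute(
    """
    SELECT run_date, AVG(success) AS pass_rate
    FROM validation_results
    GROUP BY run_date
    ORDER BY run_date
    """
).fetchall()

for run_date, pass_rate in trend:
    print(run_date, pass_rate)
```

A per-run pass rate is only one possible metric. Tracking results per rule or per data owner would follow the same pattern with a different `GROUP BY`.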

3. Involving the right people

All this is very nice because it enables a more scalable approach to data quality beyond the validations that only your Data and IT teams know about. But there is one essential prerequisite to make it all happen: you need to involve the right people along the way, from start to finish.

A bottom-up, data-driven approach is a great way to discover data quality rules. It enables you to understand your data better and detect issues you didn’t expect initially. Besides the bottom-up approach, it’s also crucial to involve people from your business: domain experts or data stewards that genuinely understand the assumptions, constraints, and business logic to make sure that your data is fit for purpose.

And to ensure that your data quality effort is not lost, it’s crucial that you centralize data quality resolution and monitoring around those domain experts. While they might not always be able to solve the issues themselves, they are best placed in your organization to take action and involve the right people, ensuring that data quality issues are solved or that a structural solution is put in place.

Although Great Expectations won't help you find the right people, it provides a framework and a shared language to communicate about data quality between domain experts and data stewards on the one hand and IT teams on the other. And once you've identified the right people, you can ensure that actions triggered by Great Expectations involve your domain experts or data stewards.

Curious about how to get started?

Ready to take action but not sure how to get started with Data Quality by Design? Reach out to us. We’ll be happy to help.