Case studies

Discover how we helped companies with their data and AI projects.

Unlocking Viewer Insights with a Scalable Data Platform

Streamz is the Flemish video-on-demand platform offering local and international series and films through a subscription-based model. Streamz focuses on delivering high-quality content tailored to local audiences, supported by a strategic partnership with Paramount.

Data Engineering

Business Analytics

Data Governance

Boosting Operational Efficiency: Reshaping Workplace Prevention with AI

IDEWE, Belgium's leading external service for workplace prevention and protection, has one clear mission: improve the well-being of employees and workplace environments.

AI & Data Science

Business Analytics

Strengthening Data Trust: Transforming Enterprise Data Governance

Colruyt Group, one of Belgium's leading retail players, has always recognized both the strategic and operational value of data.

Data Governance

Building Customer-Centric Growth through One Shared Data Foundation

Essentiel Antwerp is a familiar name in Belgian fashion and is becoming a fashion statement worldwide, with 38 flagship stores and more than 900 brand-in-brand stores.

Business Analytics

Automating Disability Claims Handling with Intelligent Automation

NN Belgium is a leading insurer supporting customers through life events. Their claims teams handle sensitive dossiers where speed, consistency, and compliance are critical, especially in disability claims with complex follow-up and documentation needs.

AI & Data Science

Predicting Platelet Demand: Transforming Healthcare Logistics with Time Series Forecasting

Improving platelet demand planning with time series forecasting to support reliable healthcare logistics.

AI & Data Science

Improving Logistics Operations Through Automated Data Quality Controls

Implementing an End-to-End Automated Solution

Data Governance

AI & Data Science

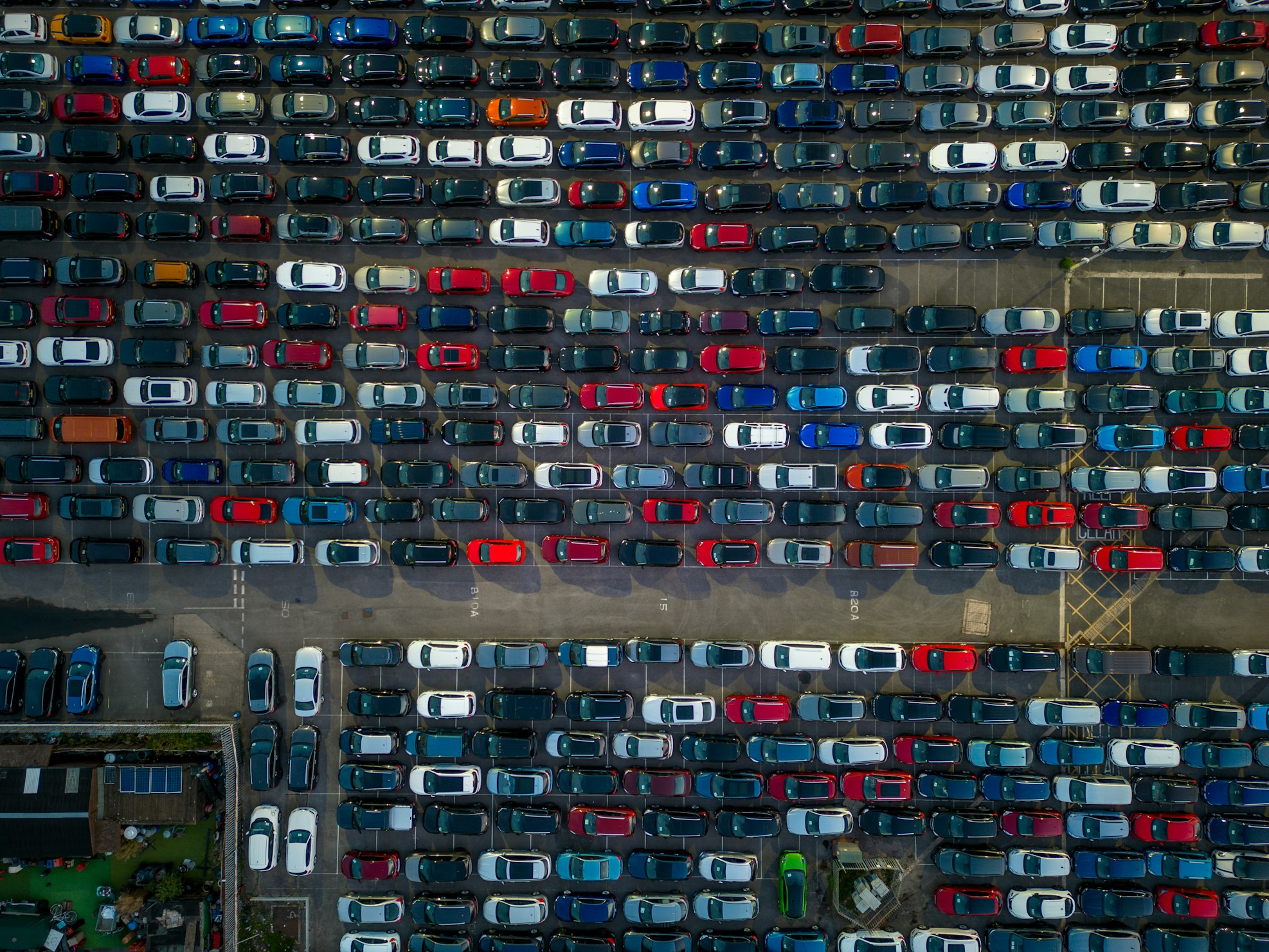

Unifying Customer Data: Transforming Automotive Engagement with 360° Customer Insights

Better-targeted campaigns, deeper customer engagement

Business Analytics

Boosting Tourist Accommodation Occupancy with Data-Driven Insights

Daily delivery of up to 1,300 custom reports to help accommodation owners run their business better

Business Analytics

Elevating Loyalty Programs with AI-Driven Personalization

Data-Driven Strategies for Personalization and Optimization

AI & Data Science

Improving SEO with Generative AI

A step forward in improved online presence

AI & Data Science

AI-powered knowledge, responsibly delivered

The impact of an internal chatbot on a workplace prevention provider

AI & Data Science

Generating input for a publicly available mobility insights dashboard

Create a scalable and future-proof data architecture based on publicly available requirements

Business Analytics

Governing Retail Data to Support ESG & Regulatory Reporting

Driving Positive Environmental Impact through Effective Data Governance

Data Governance

Scaling Global HCP Engagement with Trusted Interaction Data

Building a Proactive Data Quality Framework

Data Governance

Predicting Viewer Preferences: Transforming Media Engagement with Real-Time Recommendation Models

Algorithms to improve the user experience

AI & Data Science

Introducing a Data Product Approach in Healthcare

We helped a healthcare organization introduce a data product approach to create new data-driven services on a governed, scalable data platform.

Business Analytics

Data Governance

Enhancing Client Services with Data Products-as-a-Service

Deliver data products as a service with minimal IT dependencies

Business Analytics

Building a Future-Proof Power BI Reporting Landscape in Banking

From Scattered to Structured Data Landscape

Business Analytics

Collibra Edge: Redefining Data Governance in Retail

Navigating the Unknown and Overcoming Migration Hurdles

Data Governance

Improving ETL Performance After Cloud Migration with Azure SQL Hyperscale

Reduce processing time for larger and more complex ETL workflows

Data Engineering

From Confusion to Clarity: Streamlining Issue Management

Better ticketing saves significant time and cost

Data Governance

From Data Ambition to a Data-Driven Organization in the Energy Sector

Take the proper steps to become a data-driven organization

Data Governance

Reducing Risk and Ensuring Compliance with a Data-by-Design Approach

Better business results thanks to the management of data assets throughout their lifecycle

Data Governance

Improving Marketing Productivity with a Cloud-First Data Platform

Step by step toward quick results without losing sight of the longer-term goal

Business Analytics

Enabling Scalable Analytics with a Centralized Cloud Data Hub

Combine an event-driven cloud data architecture with serverless compute resources

Data Engineering

Business Analytics

Moving an On-Premise Data Warehouse into the Cloud

A more cost-effective and future-proof Azure cloud environment

Business Analytics

From Ad-Hoc Scripts to a Future-Proof BI Platform for a Telecom Provider

A future-proof BI environment fully trusted by end-users

Business Analytics

Supporting Large-Scale Cloud Migration in Telecom Through Data Governance

Data Governance delivers the transparency needed for a smooth cloud migration

Data Governance

Aligning Operations and Strategy with Business Intelligence in Industrial Gases

High-quality decision-making through better insights into data

Business Analytics

From Disparate Systems to Centralized Fleet Governance

More accessible insights into the governance of the vehicle fleet

Business Analytics

Enabling Brand Innovation with Customer Engagement Insights

Boosting the visibility of brand awareness programs

Business Analytics

From Low BI Adoption to Self-Service Analytics in Retail

Building an engaged user community to obtain easy-to-share dashboards, entirely owned by our client

Business Analytics