LLM Data Security: From Business Risks to Responsible AI Innovation

4 November 2025

The rise of Large Language Models (LLMs) like ChatGPT and Google Gemini is transforming how businesses operate. From accelerating content creation to analyzing complex datasets, the possibilities seem endless. But as we embrace these powerful tools, a crucial question arises: what exactly happens to the sensitive company information you enter into them? For many organizations, this remains a gray area that carries risks for data security, privacy, and compliance.

This article explains how LLMs process your data, highlights the hidden dangers, and, most importantly, provides a practical governance framework to use AI securely and responsibly.

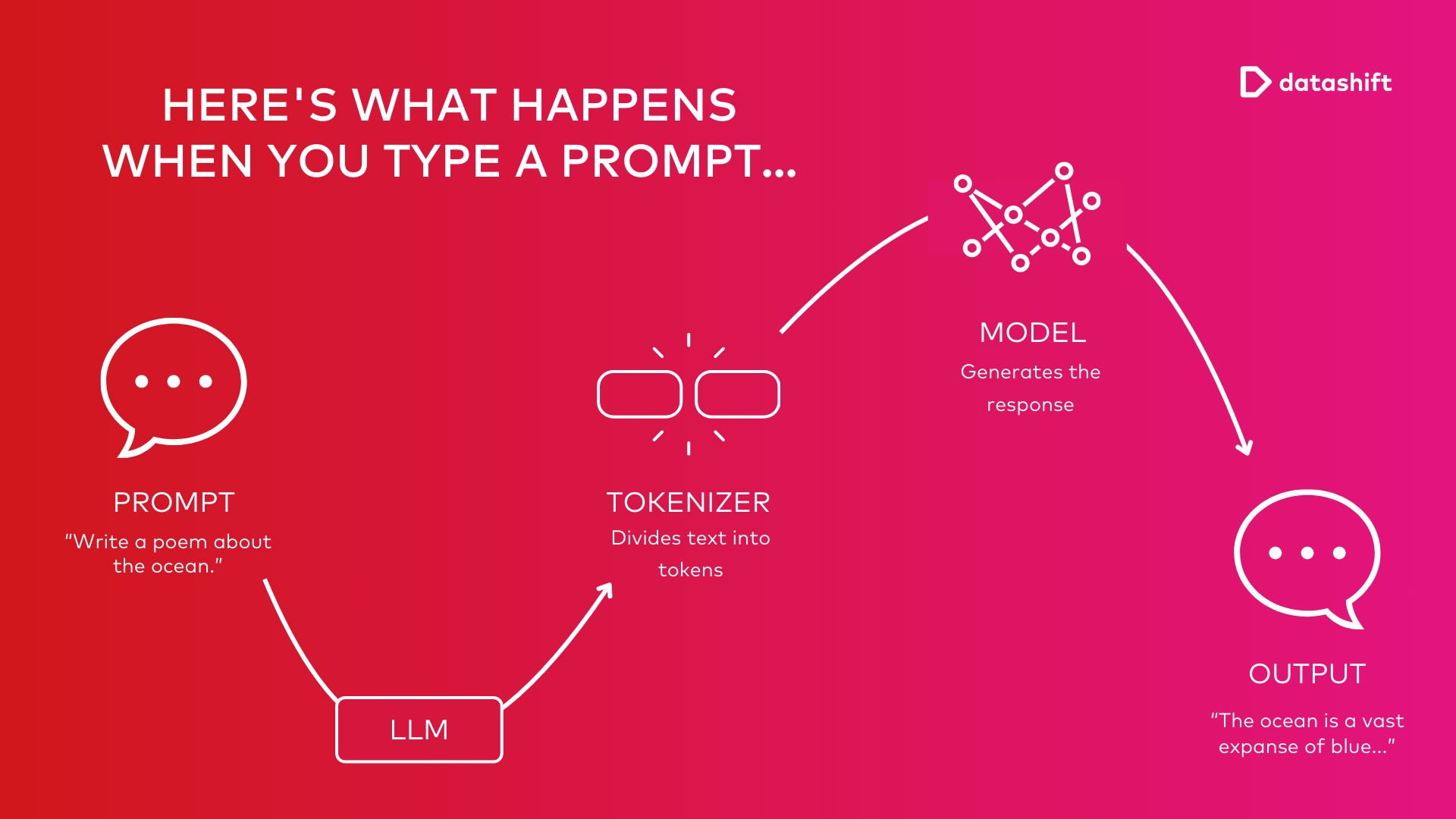

The Journey of a Prompt: What Happens to Your Input?

When a user enters a query or command (a 'prompt') into an LLM, the data passes through several processing steps. At its core, an LLM is a neural network built on the transformer architecture. It breaks the input into smaller pieces called 'tokens' and then performs mathematical operations to estimate how those tokens relate to one another. This lets the model recognize patterns in language and generate a fitting response.
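To make the idea of tokens concrete, here is a minimal sketch in Python. It is a toy illustration, not how any specific provider tokenizes text: production LLMs typically use subword tokenizers, and the function names `tokenize` and `build_vocab` are hypothetical. The point is that every word you type, confidential or not, is turned into numbers that the model processes.

```python
import re

def tokenize(text: str) -> list[str]:
    """Split text into lowercase word and punctuation tokens (toy example)."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Give each distinct token an integer ID, in order of first appearance."""
    vocab: dict[str, int] = {}
    for token in tokens:
        vocab.setdefault(token, len(vocab))
    return vocab

prompt = "Summarize the Q3 revenue report. The report is confidential."
tokens = tokenize(prompt)
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]

print(tokens)
print(token_ids)  # [0, 1, 2, 3, 4, 5, 1, 4, 6, 7, 5]
```

Repeated words such as "report" map to the same ID, which is what allows a model to relate different parts of the prompt to each other.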

It’s important to distinguish between:

- Training Data: the massive dataset the model was originally trained on, often scraped from the internet.

- Your Input Data: the prompts and queries you provide during use. Your input is used to generate a specific response.

Where and How Is Your Business Data Stored?

Your input is typically not used to retrain the model directly. However, when using LLMs via an API (LLM-as-a-Service), providers often log prompts and responses temporarily (e.g., for 30 days) to detect abuse. Depending on the provider, you may be able to opt out of data retention or usage for model improvement.

The Hidden Dangers: Top LLM Data Security Risks

The uncontrolled use of LLMs can lead to significant risks. The primary dangers include:

- Leakage of Sensitive Data

Employees may accidentally enter confidential data such as intellectual property, customer records, or financial reports. If the provider uses inputs to improve its models, this information can in some cases later surface in outputs to other users.

- Compliance and Privacy Violations

Sharing personal data with public LLMs may breach regulations like GDPR or CCPA, leading to heavy fines and reputational damage.

- "Shadow AI"

When staff use public AI tools without IT approval, they create "shadow AI" environments. Without oversight of which tools receive which data, it becomes nearly impossible to enforce compliance and security.

- Manipulation and Cyberattacks

Techniques like prompt injection can trick models into revealing restricted information. Others, such as training data poisoning, manipulate the model’s behavior and compromise trust.
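One way to reduce the leakage and privacy risks above is to mask obvious personal data before a prompt leaves the organization. The following Python sketch assumes a simple regex-based approach. The patterns and the `redact` function are illustrative assumptions, not a complete detector, and a real deployment would need broader, tested detection.

```python
import re

# Illustrative patterns only. Order matters: IBANs are masked before phone
# numbers so that the digits inside an IBAN are not mistaken for a phone number.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "IBAN": re.compile(r"\b[A-Z]{2}\d{2}[A-Z0-9]{11,30}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}

def redact(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace matches with placeholders and count the replacements per type."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        prompt, n = pattern.subn(f"[{label}]", prompt)
        counts[label] = n
    return prompt, counts

text = "Contact jane.doe@example.com or +31 6 1234 5678 about NL91ABNA0417164300."
redacted, counts = redact(text)
print(redacted)  # Contact [EMAIL] or [PHONE] about [IBAN].
print(counts)
```

The counts can be logged to show how often sensitive data is caught, which helps make shadow AI usage visible. Pattern matching like this does not protect against prompt injection or data poisoning; those require separate controls.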

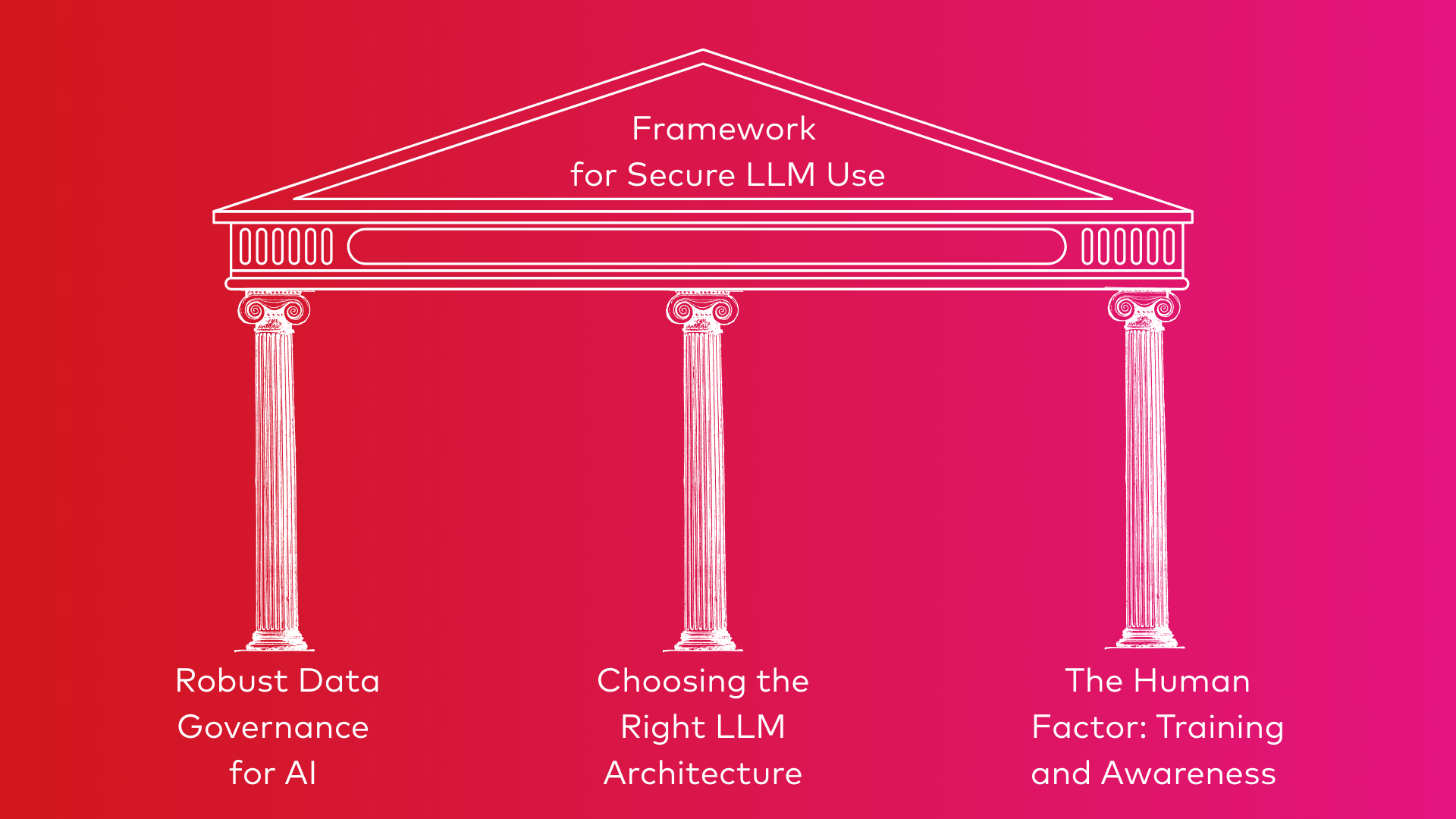

Building Your Defense: A Framework for Secure LLM Use

The solution is not banning AI, but governing its use responsibly. A strong framework balances innovation with data protection.

Pillar 1: Robust Data Governance for AI

A strong governance framework is the foundation for responsible AI use. It starts with clear, practical rules that employees can actually apply. The framework should include:

- Clear Usage Policy: Define what type of data is allowed in LLMs and what is prohibited.

- Data Classification: Help employees assess the sensitivity of information before using AI tools.

- Compliance Alignment: Ensure policies respect legal and ethical standards, including the EU AI Act.
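A usage policy and a data classification scheme can also be written down in a form that tools can check automatically. The sketch below is one possible encoding in Python. The sensitivity levels, tool names, and the `is_allowed` function are hypothetical examples, and your own policy will define different ones.

```python
from enum import IntEnum

class Sensitivity(IntEnum):
    """Example classification levels, from least to most sensitive."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3

# Hypothetical policy: the highest sensitivity level each tool may receive.
MAX_ALLOWED = {
    "public_chatbot": Sensitivity.PUBLIC,
    "enterprise_api": Sensitivity.INTERNAL,
    "self_hosted_llm": Sensitivity.CONFIDENTIAL,
}

def is_allowed(tool: str, level: Sensitivity) -> bool:
    """Return True if data of this level may be sent to the tool.

    Tools that are not listed in the policy are denied by default.
    """
    limit = MAX_ALLOWED.get(tool)
    return limit is not None and level <= limit

print(is_allowed("public_chatbot", Sensitivity.PUBLIC))        # True
print(is_allowed("public_chatbot", Sensitivity.CONFIDENTIAL))  # False
print(is_allowed("unapproved_tool", Sensitivity.PUBLIC))       # False
```

Denying unlisted tools by default means that a newly adopted tool stays blocked until someone reviews it and adds it to the policy.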

Pillar 2: Choosing the Right LLM Architecture

How you implement an LLM has major implications for data security. Organizations have two main options:

- LLM-as-a-Service (via API): The most accessible option, providing access to a model from a provider like OpenAI or Google.

✅ Ease of use, scalability, and no infrastructure maintenance.

❌ Less control over your data. It's crucial to thoroughly review the provider's terms of service and data retention policies.

- Self-hosting: The organization runs an (often open-source) LLM on its own infrastructure (on-premise or in a private cloud).

✅ Maximum control over data, security, and compliance, which is essential for highly sensitive data and regulated industries.

❌ Requires significant investment in hardware (GPUs) and specialized expertise to manage and maintain the models.
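The trade-off between the two options can be summarized as a simple decision rule. The Python sketch below is a simplification under stated assumptions: the `Workload` fields and the `recommend_deployment` function are hypothetical, and a real decision involves more factors, such as cost, latency, and contract terms.

```python
from dataclasses import dataclass

@dataclass
class Workload:
    """Assumed inputs for the decision; adapt these to your own situation."""
    handles_regulated_data: bool
    has_gpu_infrastructure: bool
    has_ml_operations_team: bool

def recommend_deployment(w: Workload) -> str:
    """Map the pros and cons listed above to a rough recommendation."""
    can_self_host = w.has_gpu_infrastructure and w.has_ml_operations_team
    if w.handles_regulated_data:
        if can_self_host:
            return "self-hosted"
        return "self-hosted (investment in hardware and expertise needed first)"
    return "llm-as-a-service (review terms of service and data retention)"

print(recommend_deployment(Workload(True, True, True)))
print(recommend_deployment(Workload(False, False, False)))
```

Many organizations end up combining both options, using a self-hosted model for sensitive workloads and an API for everything else.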

Pillar 3: The Human Factor: Training and Awareness

Technology alone is not enough; employees are the first line of defense.

- Training and Awareness: Organize training to inform staff about the risks and the company's AI policy. It's crucial that everyone understands why certain rules are in place.

- Practical Do's and Don'ts:

✅ Use an LLM to rewrite a marketing text.

❌ Paste a list of customer information into a public chatbot and ask it to analyze the data.

- 'Human in the Loop': Emphasize that LLMs are powerful tools, but not infallible experts. Critical review and human oversight remain essential to ensure the accuracy and safety of the output.

Secure LLM Adoption Through Responsible Innovation

LLMs are powerful enablers of efficiency and innovation. The key is not to fear them, but to manage the risks proactively.

By building a foundation of data governance, choosing the right LLM deployment strategy, and investing in employee awareness, organizations can unlock AI’s potential while safeguarding their most valuable asset: business data.

Want to know more? Explore our blogs and articles on AI Governance, or contact us to discuss how we help organizations integrate AI securely.