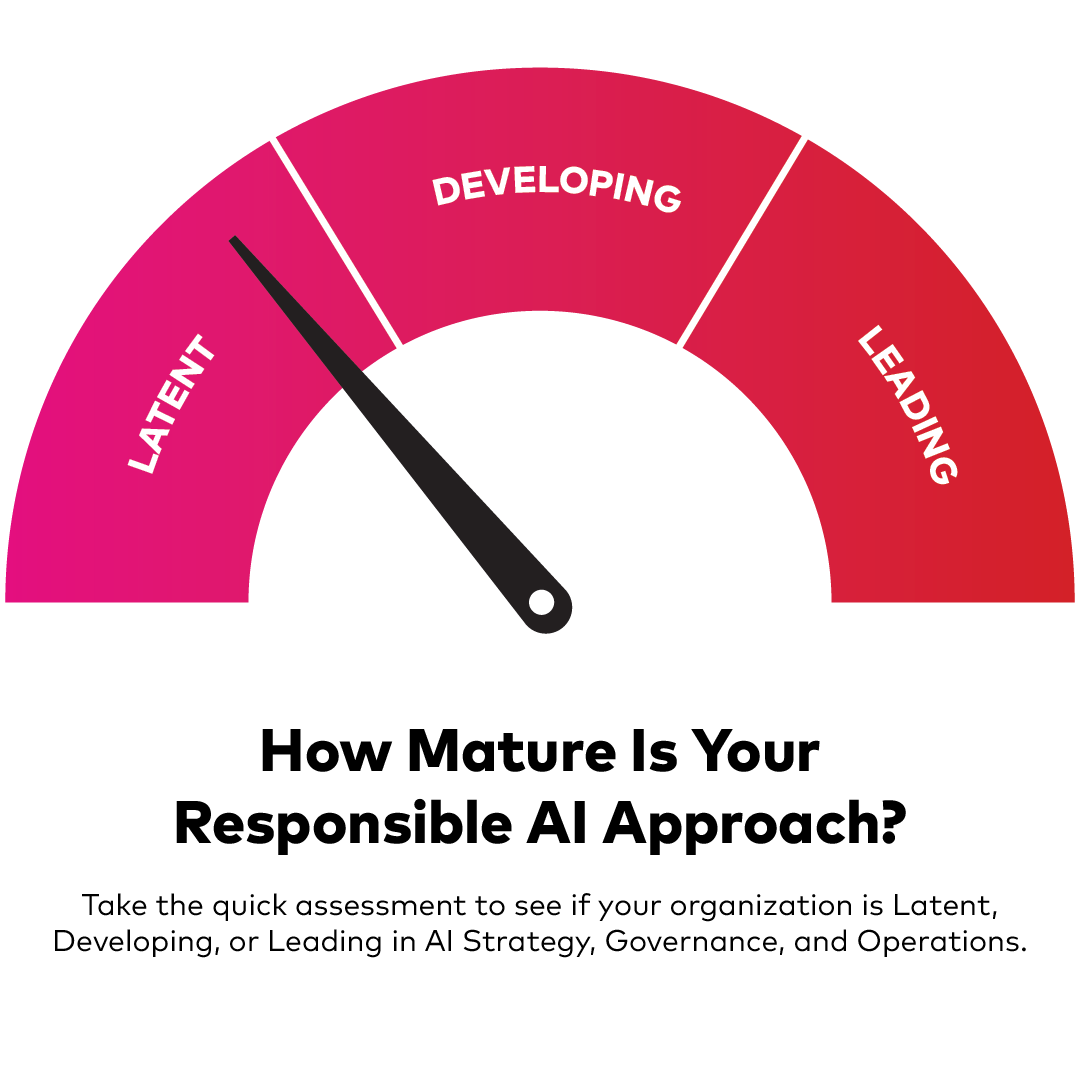

Responsible AI Maturity Assessment

Artificial Intelligence (AI) has advanced rapidly in recent years, unlocking new opportunities for businesses but also introducing new risks. AI systems can produce incorrect or biased outputs and pose safety, security, and societal risks.

As these risks grow, responsible AI becomes more important. Responsible AI ensures that AI systems create value while minimizing harm to users, organizations, and society. At its core, responsible AI is about risk management: identifying risks and mitigating them. But it also involves defining an organization’s stance on key principles such as transparency, accountability, and privacy.

This Responsible AI Maturity Assessment helps you identify where your organization stands today. It provides insights into your current level of responsible AI maturity and highlights areas for improvement. At the end, you will receive a personalized report describing your organization’s status, along with concrete recommendations for improving your responsible AI maturity. To get the most value, answer the following questions based on your organization’s current status, selecting the option that best describes your situation, even if none is a perfect fit.