Tackling AI Risks: Insights from Assessing a GenAI Chatbot

8 July 2025

Quick access to critical information can make all the difference for many organizations. Our client, a health services organization, faced exactly this challenge: its vast knowledge base of medical guidelines and instructions was difficult for doctors and nurses to navigate, which slowed down decision-making. To address this, we developed a Retrieval-Augmented Generation (RAG) Q&A chatbot that lets staff type a question and receive an accurate, context-specific answer drawn from the knowledge base within seconds. With a pilot launch planned in just one month, the client wanted to be sure the chatbot was safe, compliant, and reliable. Enter our AI Risk Assessment Workshop: a collaborative session, led by our Responsible AI experts, to identify and mitigate risks. In this post, we walk you through how we addressed the client's concerns and why this process is critical for responsible AI deployment.

From Information Overload to Focused Solution

For the client's doctors and nurses, finding the right information in a sprawling knowledge base was a constant hurdle, and that inefficiency directly slowed their decision-making. An AI-powered chatbot seemed like the ideal solution, promising instant, precise answers. However, deploying new technology in a sensitive healthcare environment raised important questions. The client had valid concerns about the chatbot's reliability and its compliance with regulations such as the GDPR and the EU AI Act. Could they be sure the answers were accurate? And were there security vulnerabilities that needed to be addressed?

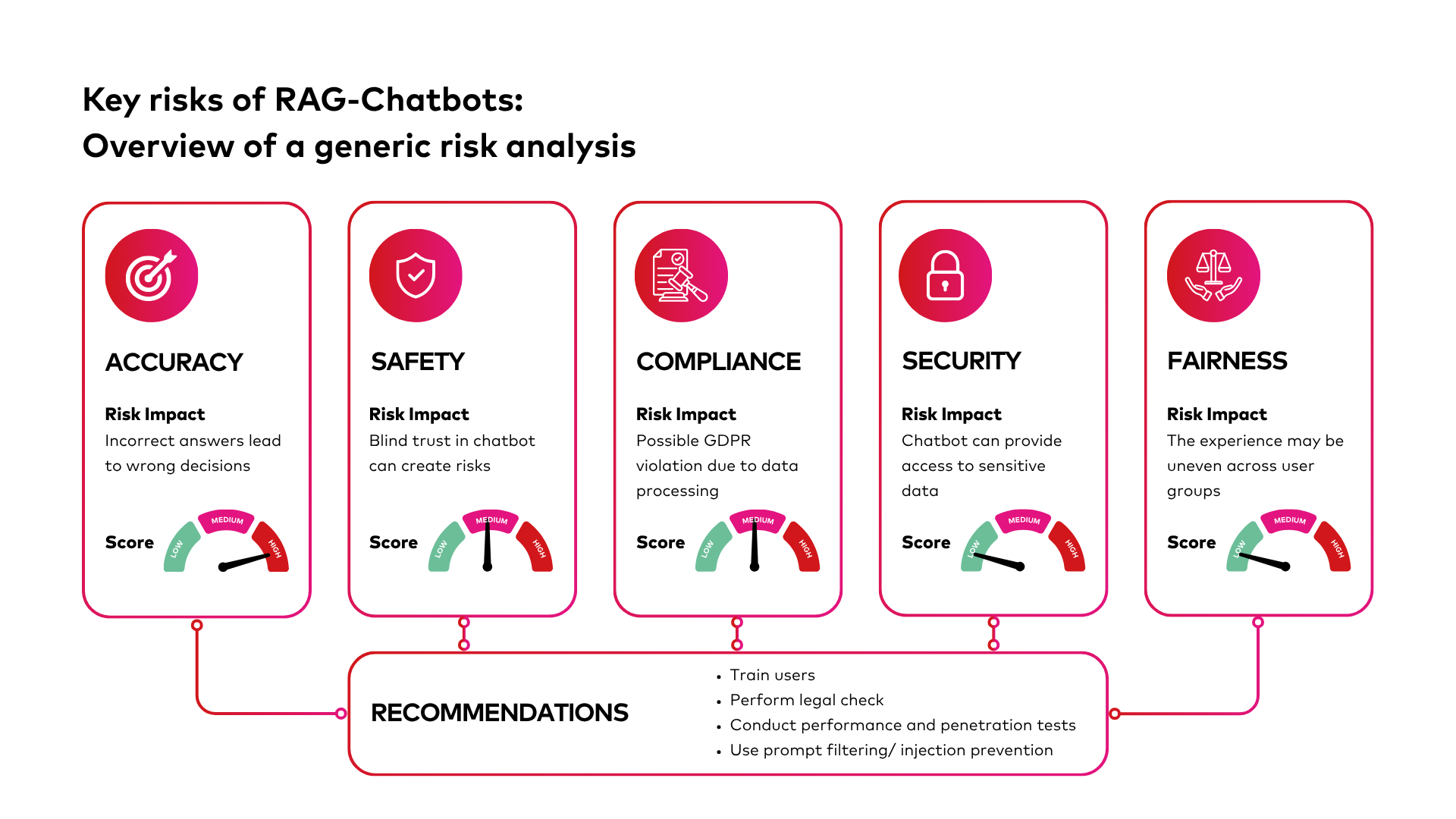

Estimating Risks to Define Clear Actions

To move from a promising concept to a responsible solution, we needed to address these uncertainties head-on, and an AI Risk Assessment Workshop provided the ideal framework. The goal was to systematically brainstorm potential risks, from accuracy to security, and then score each one on its likelihood and potential impact. This structured approach let us prioritize what mattered most. A key to the workshop's success was bringing together a diverse group: the chatbot's developers, a Responsible AI specialist, the client's project stakeholders, and the client's security and legal representatives. This mix of perspectives made the assessment comprehensive and confirmed how important varied viewpoints are for spotting blind spots and building a robust plan.
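The likelihood-and-impact scoring described above is often captured in a simple risk matrix. The snippet below is a minimal sketch of that idea, not the workshop's actual method: the 1-5 scales, the multiplication rule, the priority thresholds, and the example risks are all assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Assumed 1-5 scales; the post does not state which scales the workshop used.
SCALE = range(1, 6)


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 = rare, 5 = almost certain (assumed scale)
    impact: int      # 1 = negligible, 5 = severe (assumed scale)

    def __post_init__(self) -> None:
        # Reject scores outside the assumed 1-5 range.
        if self.likelihood not in SCALE or self.impact not in SCALE:
            raise ValueError(f"{self.name}: scores must be between 1 and 5")

    @property
    def score(self) -> int:
        # Classic risk-matrix score: likelihood multiplied by impact (1-25).
        return self.likelihood * self.impact


def priority(score: int) -> str:
    # Hypothetical thresholds, for illustration only.
    if score >= 15:
        return "high"
    if score >= 8:
        return "medium"
    return "low"


def prioritize(risks: list[Risk]) -> list[tuple[str, int, str]]:
    """Sort risks by score, highest first, and attach a priority band."""
    ranked = sorted(risks, key=lambda r: r.score, reverse=True)
    return [(r.name, r.score, priority(r.score)) for r in ranked]


if __name__ == "__main__":
    # Hypothetical risks for a RAG chatbot; not the client's actual register.
    risks = [
        Risk("Answer not grounded in retrieved guideline", likelihood=3, impact=5),
        Risk("Personal data entered in prompts", likelihood=2, impact=4),
        Risk("Prompt injection via knowledge-base content", likelihood=2, impact=3),
    ]
    for name, score, band in prioritize(risks):
        print(f"{score:>2}  {band:<6}  {name}")
```

Multiplying the two scores is only one common convention; some teams weight impact more heavily for safety-critical domains such as healthcare, so the rule is worth agreeing on with all stakeholders before scoring begins.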

The Result: A Clear Path to a Confident Launch

The workshop turned uncertainty into a clear, actionable plan. It answered the client's lingering questions and produced concrete mitigation strategies, such as strengthening the validation process of the RAG pipeline, implementing additional security protocols, and providing clear user guidance. The client came away with a clear understanding of its risk position and the steps needed for a safe pilot launch. Most importantly, this collaborative process built trust and confidence that AI was the right way to achieve the desired efficiency gains. The client is now ready to move forward with a tool that will genuinely support its healthcare professionals.

Conclusion

Our AI Risk Assessment Workshop was a pivotal step in ensuring the success of our client’s RAG-based Q&A chatbot. By bringing together diverse expertise, categorizing risks systematically, and addressing client concerns, we laid the groundwork for a responsible and impactful pilot launch. At Datashift, we believe that responsible AI starts with collaboration and proactive planning. Ready to deploy AI solutions with confidence? Let’s work together to navigate the complexities of AI governance and deliver data-driven impact.